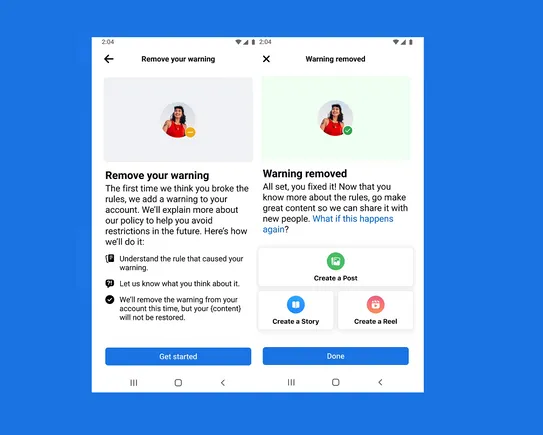

Meta is looking to help creators avoid penalties by implementing a new system that will let creators who violate Facebook’s rules for the first time complete an educational process about the specific policy in question in order to have that warning removed.

As per Meta:

“Now, when a creator violates our Community Standards for the first time, they will receive a notification to complete an in-app educational training about the policy they violated. Upon completion, their warning will be removed from their record and if they avoid another violation for one year, they can participate in the ‘remove your warning’ experience again.”

It’s basically the same as the process that YouTube implemented last year, which enables first-time Community Guidelines violators to undertake a training course to avoid a channel strike.

Though in both cases, the most extreme violations will still result in immediate penalties.

“Posting content that includes sexual exploitation, the sale of high-risk drugs, or glorification of dangerous organizations and individuals are ineligible for warning removal. We will still remove content when it violates our policies.”

So it’s not a change in policy, as such, just in enforcement, giving those who commit lesser rule violations a means to learn from what could be an honest mistake, as opposed to punishing them with restrictions.

Though if you commit repeated violations within a 12-month period, you’ll still cop account penalties, even if you undertake these courses.

The option will give creators more leniency, and aims to improve understanding rather than rely on a more heavy-handed enforcement approach. That’s been one of the key recommendations from Meta’s independent Oversight Board: that Meta provide more explanation of why it has enacted profile penalties.

Because often, violations come down to misunderstanding, particularly in regard to the more opaque elements of Meta’s rules.

As explained by the Oversight Board:

“People often tell us that Meta has taken down posts calling attention to hate speech for the purposes of condemnation, mockery or awareness-raising because of the inability of automated systems (and sometimes human reviewers) to distinguish between such posts and hate speech itself. To address this, we asked Meta to create a convenient way for users to indicate in their appeal that their post fell into one of these categories.”

In certain circumstances, you can see how Facebook’s more binary definitions of content could lead to misinterpretation. That’s especially true as Meta puts more reliance on automated systems to assist in detection.

So now you’ll have some recourse if you cop a Facebook penalty, though you can only have one warning removed per year. It’s not a major change, but a helpful one in certain contexts.
