With various major elections being held around the globe in 2024, and concerns around different aspects of the digital information sphere, it feels like we’re on a misinformation collision course, where the lessons of the past are being dismissed or passed over, in favor of whatever ideological or idealistic viewpoint will bring more value to those pulling the strings.

And while the social platforms are saying all the right things, and pledging to improve their security measures ahead of the polls, we’re already seeing signs of significant influence activity, which will inevitably impact voting outcomes. Whether we like it or not.

The first major concern is foreign interference, and the influence of state-based actors on global politics.

This week, for example, Meta reported the discovery of more than 900 fake profiles across its apps, which utilized generative AI profile images, and were effectively being used to spy on foreign journalists and political activists via their in-app activity.

An investigation by the Tech Transparency Project, meanwhile, has found that X has approved various leaders of terror groups for its paid verification checkmarks, giving them not only additional credibility, but also amplifying their posts in the app. Late last year, Meta also reported the removal of two major influence operations operating out of Russia, which involved over 1,600 Facebook accounts, and 700 Facebook Pages, and had sought to influence global opinion about the Ukraine conflict.

This is not unprecedented, or unexpected. But the prevalence and persistence of such campaigns underlines the problem that social networks face in policing misinformation, and ensuring that voters remain informed, ahead of major polls.

Indeed, almost every platform has shared insight into the scope of foreign influence activity:

- Meta also recently reported the detection and removal of a China-based influence operation, which used Facebook and Instagram profiles that posed as members of U.S. military families, and amplified criticism of U.S. foreign policy with regard to Taiwan and Israel, as well as its support of Ukraine. The group also shared a fake petition that criticized U.S. support for Taiwan. The petition reportedly had over 300 signatures.

- In 2022, Google reported that it had disrupted over 50,000 instances of activity across YouTube, Blogger and AdSense (profiles, channels, etc.) conducted by a China-based influence group known as Dragonbridge. Dragonbridge accounts post mostly low-quality, non-political content, while infusing that with pro-China messaging. This approach has been dubbed “Spamouflage” due to the practice of hiding political messages among junk.

- Meta has also uncovered similar activity, including the removal of a group consisting of over 8,600 Facebook accounts, pages, groups and Instagram accounts in August last year, which had been spreading pro-China messages, while also attacking critics of CCP policies. Meta’s investigations found that the same network was also operating clusters of accounts on X (formerly Twitter), TikTok, Reddit and more.

- X no longer shares the same level of depth into account enforcement actions as it did when it was called Twitter, but it too has reported the detection and removal of various Russian- and Iranian-based operations designed to influence political debate.

- Even Pinterest reported that it has been targeted by Russian-backed groups seeking to influence foreign elections.

As you can see, Russian and Chinese operations are the most prevalent, and these are the same two regions that were accused of seeking to influence U.S. voters ahead of the 2016 U.S. Presidential election.

And yet, just last week, X gleefully promoted an interview between Tucker Carlson and Russian President Vladimir Putin, giving a mainstream platform to the very ideas that social platforms have spent years, and significant technological effort, trying to suppress.

Which, in some people’s view, is the problem, in that such views shouldn’t be suppressed or restricted. We’re all smart enough to work out what’s right and wrong on our own, we’re all adults, so we should be able to see varying viewpoints, and judge them on their merits.

That’s the view of X owner Elon Musk, who’s repeatedly noted that he wants to enable full and open speech in the app, whether it’s offensive, harmful or even blatant propaganda.

As per Musk:

“All news is to some degree propaganda. Let people decide for themselves.”

In theory, there is value in this approach, and even a right, in giving people the freedom to make up their own minds. But as with the 2016 U.S. election campaign, which various investigations have found was at least partly influenced by Russian-backed operations, allowing such content can lead to the weaponization of information, for the gain of whoever is best able to steer opinion, using whatever approach their own morals allow.

That can extend to, say, organizing rallies of rival political groups at the same locations and times, in order to further stoke division and angst. As such, it’s not even so much about the information being shared in itself, but the end result of this provocation, which can then sway voters with incorrect or false information, and interfere with the democratic process.

And that could be even worse this time around, with the prevalence of generative AI tools that can create convincing audio and visuals in order to suggest further untruths.

The AI-driven approach is already being employed by various political operatives.

The challenge with this element is that we don’t know what the impact will be, because we’ve never dealt with such realistic and readily accessible AI fakes before. Most people, of course, can tell the difference between what’s real and what’s been generated by a machine, while crowd-sourced feedback can also be effective in dispelling such fakes quickly.

But it only takes a single resonant image to have an impact, and even if that image is removed or debunked, the ideas embedded through such visuals can linger, even with robust detection and removal processes.

And we don’t really even have such processes fully in place. While the platforms are all working to implement new AI disclosures to combat the use of deepfakes, again, we don’t know what the full effect of such fakes will be, so they can only prepare so much for the expected AI onslaught. And it may not even come from the official campaigns themselves, with thousands of creators now pumping prompts through DALL-E and Midjourney to come up with themed images based on the latest arguments and political discussions in each app.

Which is likely a big reason why Meta’s looking to step away from politics entirely, in order to avoid the scrutiny that will come with the next wave.

Meta has long maintained that political discussion contributes only a minor amount to its overall engagement levels anyway (Meta reported last year that political content makes up less than 3% of total content views in the News Feed), and as such, it now believes that it’s better off stepping away from this element completely.

Last week, Meta outlined its plan to make political content opt-in by default across its apps, noting at the same time that it had already effectively reduced exposure to politics on Facebook and IG, with Threads now also set to be subject to the same approach. That won’t stop people from engaging with political posts in its apps, but it will make them harder to see, especially since all users will be opted out of seeing political content by default, and most simply won’t bother to manually switch it back on.

At the same time, almost as a counterpoint, X is making an even bigger push on politics. With Musk as the platform’s owner, and its most influential user, his personal political views are driving more discussion and interest, and with Musk firmly planting his flag in the Republican camp, he’ll undoubtedly use all of the resources that he has to amplify key Republican talking points, in an effort to get their candidate into office.

And while X is nowhere near the scale of Facebook, it does still (reportedly) have over 500 million monthly active users, and its influence is significant, beyond the numbers alone.

Couple that with its reduction in moderation staff, and its increasing reliance on crowd-sourced fact-checking (via Community Notes), and it feels a lot like 2016 is happening all over again, with foreign-influenced talking points infiltrating discussion streams and swaying opinions.

And this is before we talk about the potential influence of TikTok, which may or may not be a vector for influence from the Chinese regime.

Whether you view this as a concern or not, the scale of proven Chinese influence operations does suggest that a Chinese-owned app could also be a key vector for the same types of activity. And with the CCP also having various operatives working directly for ByteDance, the owner of TikTok, it’s logical to assume that there may well be some type of effort to extend these programs, in order to reach foreign audiences through the app.

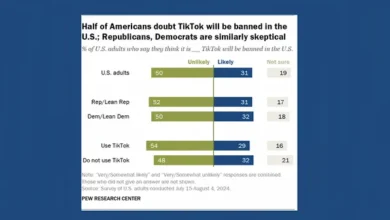

That’s why TikTok remains under scrutiny, and could still face a ban in the U.S. And yet, last week, U.S. President Joe Biden posted his first video in the app, with the potential reach it offers to prospective Democratic voters clearly outweighing these broader concerns.

Indeed, the Biden campaign has posted 12 times to TikTok in less than a week, which suggests that it will be looking to use the app as another messaging tool in the upcoming presidential campaign.

Which will also bring more people seeking political information to the app, where TikTok’s algorithms could show them whatever it chooses.

Essentially, there’s a wide range of possible weak points in the social media information chain, and with 70% of Americans getting at least some of their news input from social apps, it feels like we are going to get a major issue or crisis based on social media-based misinformation at some point.

Ideally, then, we find out ahead of time, as opposed to trying to piece everything together in retrospect, as we did in 2016.

Really, you would hope that we wouldn’t be back here yet again, and there have clearly been improvements in detection across most apps based on the findings of the 2016 campaign.

But some also seem to have forgotten those lessons, or have chosen to dismiss them. Which could pose a major risk.