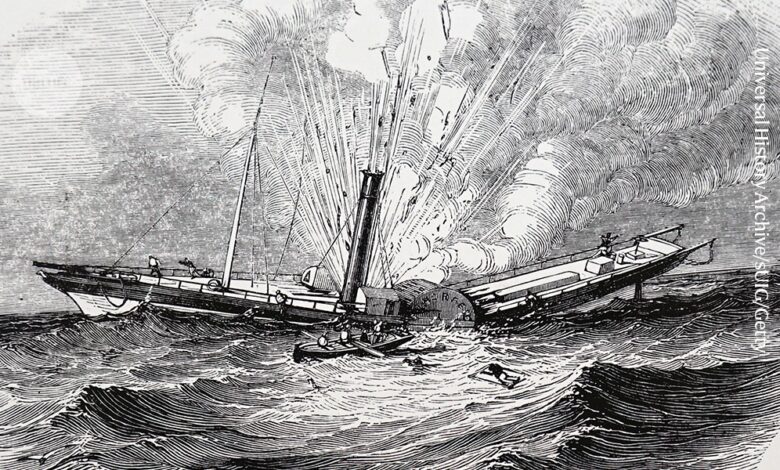

In the nineteenth century, steamboat explosions were common, until they weren’t. Credit: Universal History Archive/UIG/Getty

Wicked Problems: How to Engineer a Better World. Guru Madhavan. W. W. Norton & Company (2024)

Society relies on engineers to deliver almost everything it uses, from food and water to buildings, transport and telecommunications. But new technologies are often rushed into service, for market reasons, before potential risks and consumer behaviours are understood, and well before sufficient regulation is put in place to protect the public.

In Wicked Problems, biomedical engineer and US policy adviser Guru Madhavan considers the implications of these human-made vulnerabilities using striking stories — such as a tsunami of molasses, aeroplane crashes, exploding steamships and infants decapitated by airbags. By exploring the interplay between engineers and policymakers, Madhavan shows how engineering can produce problems that policy cannot fix, and how successful systems can create socially unacceptable risks.

Madhavan focuses on ‘wicked problems’, which emerge “when hard, soft and messy problems collide”. Time and time again, a technology becomes profitable and is widely adopted, then its problems become clear and public alarm grows. A period of debate follows, marked by inflamed emotions, news coverage, litigation, denial of responsibility and political impotence. Eventually, corrective mechanisms are developed, implemented and enforced with updated standards. These patterns and problems of rapid technological development are becoming recognized. And there are plenty of modern examples, from social-media platforms and artificial-intelligence systems to self-driving cars.

Deep dive into disasters

There’s much that can be learnt from history, Madhavan tells us. Take the lucrative business of supplying molasses for whisky and food manufacturing, for example. A massive storage tank built in 1915 in Boston, Massachusetts, had exhibited so many signs of being unsafe that the company managers and workers grew accustomed to the leaks and stress groans. One day in 1919, the tank, filled to capacity, ruptured, sending 10.5 million litres of syrup through the streets in a 10-metre-high surge, destroying buildings, killing 21 people and injuring 150.

In the wake of the molasses disaster, the industry rushed to improve the safety of both ‘hard’, physical infrastructure and ‘soft’ operational standards. Risks and vulnerabilities were assessed, better materials were engineered, designs were improved on and safety and maintenance measures were implemented to reduce the risk of pressure-vessel explosions and collapses.

Warning signs were ignored in the lead-up to the 1919 Boston Molasses Disaster. Credit: Science History Images/Alamy

But why was it normal to accept vulnerability — why did workers and managers ignore the warning signs? A social-responsibility code was needed to tackle the soft problems of human error and risk normalization, as well as the vague problems of greed, mismanagement and hubris. Had such a code existed, it would also have spoken to the ‘messy’ problem of colonial exploitation in sugar-cane-exporting countries. Madhavan brings attention to the need for engineers to take on social responsibility.

Madhavan explores six facets of wicked problems — efficiency, vagueness (about the nature of the problem), vulnerability, safety, maintenance and resilience. Risks are impossible to eliminate, but they can be diminished through ‘mindful’ processes (in which workers have time to run through checklists and identify hazards), workplace cultures of humility and continuous learning, and robust, responsive work structures, such as whiteboards on which issues can be recorded, with processes to stop work to check and report errors.

Systems-safety engineer Nancy Leveson at the Massachusetts Institute of Technology in Cambridge, for example, strives to account for social and cultural behaviours in her work. She has studied steam-boiler explosions, which are rare today but were commonplace a century ago. Of course, high-pressure-steam science and pressure-vessel engineering have improved since then, but Leveson points out that the real transition has been in culture. These explosions went from being a tragedy to being unacceptable.

The Wright stuff

Aviation provides a fertile ground for the study of such disasters, and Madhavan makes ample use of examples from this sector. In 1903, aeroplane inventor Orville Wright took on the risks of flying alone; within a decade, he was flying with paying customers. The book goes deep into the 121-year history of aircraft, flight simulators, airmail, navigation technologies, air-traffic management and government interventions. It recounts the early challenges of flying and navigating, and the risk-taking culture that was particularly common among pilots in the first three decades of aviation — resulting in a number of accidents. Flying was so unsafe in the 1960s that in the United States, only one in five people was willing to fly.

Madhavan threads the story of US aviation pioneer Edwin Link throughout the book to explore how a systems approach is needed to address wicked problems. As well as being a pilot, Link was an inventor, entrepreneur and adventurer with a strong sense of responsibility. I was awarded a Link Foundation Energy Fellowship during my PhD studies. The scholarship application asked candidates to discuss how their vision for research was guided by social responsibility — now I know why.

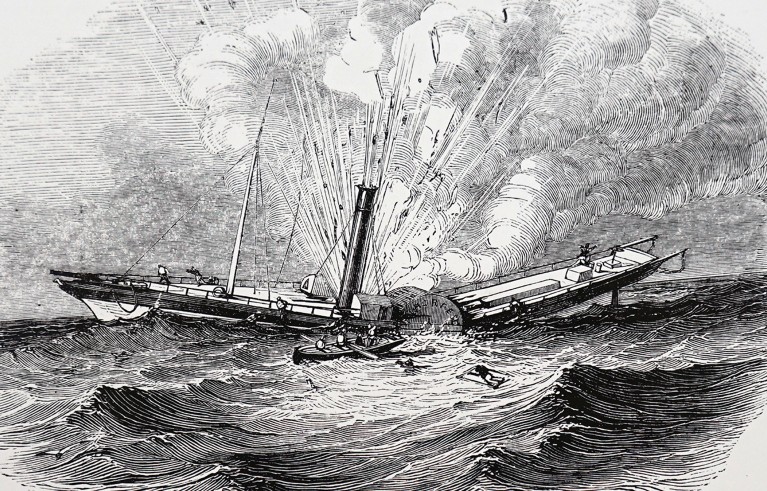

Practice in Edwin Link’s Blue Box provided pilots with physical and mental training. Credit: Jon G. Fuller/VWPics/Alamy

Link devised a flight simulator, known as the Blue Box, in which trainees gain experience with the flight deck and the controls and get a feel for different manoeuvres. Importantly, beyond technical skills, he organized the training experience around the mental discipline of the pilot. He understood that ‘blind flight’ — when pilots lose visibility of the horizon and landmarks — often led to a catastrophic loss of control because pilots relied too much on their senses instead of on the plane’s instruments. Confining pilots in the Blue Box without visual cues taught them how to trust the altitude, heading and horizontal situation indicators.

Similarly, Madhavan champions the importance of looking at seemingly technical problems through a wider lens — such as the business, policy and social aspects. He makes the case for investing in engineering-research programmes to develop the holistic approaches and methods needed to address wicked problems. He also calls for the field of engineering to expand beyond its typical role of fostering economic growth through technical innovation and take on the political and social aspects of industrial development. Examples include the Endicott-Johnson Shoes Company, then based in Binghamton, New York, which, in the 1910s, started building affordable houses for its workers and reduced their workday from ten to eight hours.

Wicked Problems is a wake-up call for all engineers to expand their mindset. Although I wish that Madhavan’s book had gone a step further, to lay out how we engineers could do that, it does provide a background and argument to pivot our current risky, if successful, endeavours towards safer systems.
